The Human Factor: Why Usable Security is Critical for the Power Grid

Most things in life involve trade-offs. Living in a large city, for instance, means trading convenient access to public infrastructure and services for more crowded spaces and perhaps higher levels of pollution and noise.

Chair of the Study Committee of Information Systems, Telecommunications, and Cybersecurity (D2), CISSP, CISM

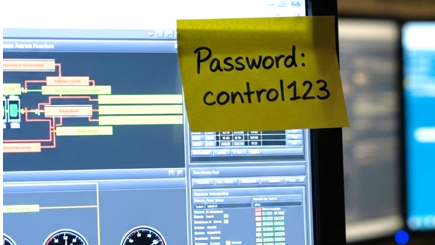

In cybersecurity, the trade-off is often between convenience and security. Ask your users to change their password every 30 days, and you will end up with annoyed users who, just trying to get on with their work, resort to “workarounds”. Human nature is such that people under immediate pressure take shortcuts, such as writing the password down somewhere after it has been changed.

This is counterproductive: it decreases security rather than increasing it. It is for this reason that the cybersecurity industry has shifted to recommending password changes only where there is an indicator of compromise. Mandatory periodic changes, if required, should use a long interval, such as once a year.

I can think of other examples in cybersecurity where best practices have changed over time in favour of either convenience or simplicity. Table 1 shows some of these practices.

| Retired Best Practice | Current Best Practice |
|---|---|
| Changing passwords regularly and periodically, e.g. every 30 days. This pushes users to circumvent the rule with tricks such as incrementing digits or making slight variations of the password. | Don’t enforce periodic password expiry. Change passwords on evidence or suspicion of compromise [1][2][3]. If mandatory periodic changes are required, specify a sensible period, such as once a year [3]. On systems where standards (e.g., CIP-007-6 R5) mandate rotation for password-only interactive access, comply while migrating to phishing-resistant MFA/certificates. |
| Enforcing password complexity rules (combination of special characters, spaces, alphanumeric), e.g. JN2kn3XC#2$!d. This pushes users to write the password down somewhere, since recall is next to impossible. | Use random passphrases, e.g. Cocoon Bead Dysphoria [4]. On systems where standards (e.g., CIP-007-6 R5) mandate password complexity, comply while reducing those cases via MFA/certificates. |
| Blocking clipboard operations (copy-and-paste), for example on login screens, web forms or terminals; this also blocks the automated copy-paste of password managers. This pushes users to write the password down somewhere, especially if the password is complex. | “Support copy and paste functionality in fields for entering passwords, including passphrases.” (NIST) [5] |
| Very short idle-session timeouts, e.g. 5 minutes, that force users to log in again repeatedly. This pushes users to write the password down somewhere. | Use sensible idle timeouts that balance the risks, for example 15 minutes when accessing sensitive information and 1 hour for less critical systems. |
| Hiding the Wi-Fi SSID. | Do not hide wireless SSIDs. Hidden SSIDs are trivially discovered by adversaries and introduce user friction without real security benefit [6]. |
| Using MAC address filtering on wireless access points or LAN switches to restrict access to allowed hosts. | Do not rely on MAC address filtering. It is difficult to manage and increases administrative burden while providing no tangible security benefit, since spoofing is trivial [6][7]. |
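As a rough illustration of the random-passphrase row in Table 1, the sketch below picks words with Python's `secrets` module and estimates the resulting entropy. The word list `DEMO_WORDS` and both function names are hypothetical and chosen for this example only; a real deployment would draw from a list of several thousand words and would follow the organisation's own policy.

```python
import math
import secrets

# Hypothetical miniature word list, for illustration only.
# A real word list would contain several thousand entries.
DEMO_WORDS = [
    "cocoon", "bead", "dysphoria", "lantern",
    "orbit", "meadow", "pylon", "quartz",
]


def generate_passphrase(words, count=3, separator=" "):
    """Pick `count` words uniformly at random using a CSPRNG."""
    if count < 1:
        raise ValueError("count must be at least 1")
    if len(set(words)) < 2:
        raise ValueError("word list needs at least two distinct words")
    return separator.join(secrets.choice(words) for _ in range(count))


def passphrase_entropy_bits(wordlist_size, count):
    """Entropy in bits when each word is drawn independently and uniformly."""
    return count * math.log2(wordlist_size)


if __name__ == "__main__":
    print(generate_passphrase(DEMO_WORDS))
    # Entropy for three words drawn from an assumed 7,776-word list.
    print(round(passphrase_entropy_bits(7776, 3), 1))
```

Under these assumptions, three words from a 7,776-word list give roughly 38.8 bits of entropy, and each additional word adds about 12.9 bits. This lets a policy lengthen the passphrase without making it harder to remember in the way that symbol-substitution rules do.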

The case for “favouring” user convenience comes back to the fact that we are dealing with humans. Research shows that when cognitive load increases, users naturally compensate with shortcuts, despite knowing the consequences [8].

If the security controls we have designed and implemented are too onerous and overwhelm the user who is “just trying to do their job”, they are naturally drawn to security-defeating shortcuts and workarounds.

These shortcuts usually stem not from ill intent but from cognitive overload, which leads to risky behaviour. Table 2 shows some examples.

| Cognitive Overload Cause Example | High Risk Behaviour |
|---|---|
| The system prompts the user to change the password again. The user is rushing to sign in to complete a task. | The user writes the new password down somewhere or, worse, emails it to a personal email account. |
| It is too difficult for the vendor support personnel to remotely access the OT system. The security systems do not work or are too difficult to use, and the user is under pressure to complete a substation commissioning project. | The user starts a temporary remote-control session (via commonly available remote support tools) so that support personnel can remotely control internal systems via screen sharing. |
| It’s late in the evening, and a member of the OT operations staff needs to install some tools provided by the vendor on a USB disk in order to troubleshoot the HMI computer. There is a secure file transfer gateway to transfer files from Corporate to OT, but it is too difficult to use or takes too much time to transfer the files. | The staff member inserts the USB drive of uncertain origin into the computer. |
| There is an emergency that requires the CEO of a power utility to access sensitive company information. Normally, the CEO would use a company-issued cellular hotspot, but the hotspot is not travel-friendly and sometimes fails to connect. | Under pressure, the CEO connects to the public airport Wi-Fi to VPN into the company network. |

In all of the examples above, additional security controls could probably be applied to prevent the high-risk behaviour. However, users would then either find more workarounds or simply become frustrated with the difficulty of getting the job done, and productivity would suffer.

Therefore, an effective cybersecurity implementation should always take human behaviour into account and introduce the minimum friction that sufficiently addresses the risks at hand. This reduces both the opportunity and the need for users to take these security-compromising shortcuts.

How do we design secure systems that reduce friction for users? I believe we can learn from the design industry, where the user is the central focus of their design.

Industrial design and UX (User Experience) design are two related disciplines that place the human experience at the centre. Industrial design focuses on the user-centric design of products, balancing function, usability and aesthetics. I think of UX as the overall effectiveness and ease of use when interacting with a system. Good UX makes a task seamless, reducing the mental friction that can lead to mistakes, especially under pressure.

Henry Dreyfuss, a pioneer in industrial design, said that “If the point of contact between the product and people becomes a point of friction, then the industrial designer has failed” [9]. Jared Spool, a pioneer in UX, said that “Good design, when it’s done well, becomes invisible. It’s only when it’s done poorly that we notice it.”

Therefore, it is my belief that for a security system to be successful and productive, it needs to balance the risks with the extent of security controls implemented, and those controls must be user-centric.

In our industry, it is even more critical to adopt user-centric security, as any user-implemented workarounds, shadow IT or non-failsafe designs may result in serious consequences.

If you’re reading this article, chances are that you are working for an organisation in the power system supply chain – be it a Transmission System Operator, Distribution System Operator, a power system equipment supplier, market operator, power generator, or someone who has an interest in the energy transition.

Many of you operate critical infrastructure in OT and ICS environments. If you are responsible for implementing cybersecurity, think of your diverse groups of users – the protection engineers, market system analysts, SCADA operators, and the various other types of users in your organisation. Getting their input and inviting their feedback during the design and testing phases of any security implementations and policies that affect them is crucial for a successful, productive and risk-balanced cybersecurity implementation.

Table 3 shows how the user-centric principles of UX and industrial design can be used in alignment with user-centric security.

| User-Centric Design Element | UX Design | Industrial Design | User-Centric Cybersecurity |
|---|---|---|---|
| Empathy | Understand users’ behaviours, motivations, and frustrations through interviews and journey mapping. | Observe how people interact with their physical environment to design seamless, practical products. | Understand a user's workflow, security knowledge, and priorities to design security measures that are helpful, not disruptive, and that people will actually use. Example: If USB blocking is to be implemented, provide user-friendly secure file transfer gateways or removable-media kiosks for engineers bringing in patches; for lower-risk scenarios where a computer’s USB port is enabled, allow only company-issued secure USB devices combined with stringent on-device scanning. |
| Usability | Make digital products easy to learn and efficient to use. | Ensure physical products are comfortable, safe, and ergonomic. | Apply “usable security” so controls don’t invite workarounds. Examples: Multi-factor authentication that works reliably on remote access jump servers without breaking field workflows; simple badge + PIN access to SCADA terminals; role-based access in an industrial SIEM where dashboards are pre-built for operators vs. security analysts, so operators don’t drown in irrelevant data. |
| Accessibility | Design for inclusivity (e.g., WCAG standards for screen readers and keyboard navigation). | Create products usable by a wide range of physical abilities. | Ensure all users can interact with security features. Examples: Clearly readable security banners on login screens (if the text is too small and too long, people are not going to read it); touchscreen + hardware button fallbacks so that PPE-clad operators can enter access credentials. |
| Feedback / Iteration | Provide cues like button highlights, spinners, or “message sent” confirmations. | Provide tactile/auditory feedback (e.g., a click, beep, or LED). | Clearly communicate security status and outcomes. Invite user feedback and iterate on the design for continuous improvement. Examples: Clear remote access login failure messages (did it fail because the antivirus is out of date, or because the user’s MFA token code is incorrect?); pilot-testing security controls with operators before rollout and obtaining user feedback periodically for enhancements. |
| Simplicity/Clarity | Reduce cognitive load with clean layouts and intuitive flows. | Use clear form to show function (e.g., scissors need no manual). | Present security warnings and choices in plain, non-technical language. Examples: Phishing warnings on email messages in simple terms; traffic-light style security dashboard in a control centre instead of complex logs; where the risk warrants, issue travelling staff a company laptop with a managed eSIM that automatically and transparently connects back to the Corporate VPN, removing the need for staff to connect to public Wi-Fi; fail-safe security systems that provide simple and reliable access during an emergency. |
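To make the “clear login failure messages” example in the Feedback / Iteration row of Table 3 a little more concrete, here is a minimal sketch of how a remote access gateway might translate internal failure reasons into plain-language messages with a next step. The reason codes, message wording and function name are all hypothetical; how much detail to reveal, and at which stage of the sign-in, remains a risk decision for each organisation.

```python
from enum import Enum


class FailureReason(Enum):
    # Hypothetical reason codes; a real gateway would define its own.
    MFA_CODE_INCORRECT = "mfa_code_incorrect"
    ANTIVIRUS_OUT_OF_DATE = "antivirus_out_of_date"
    ACCOUNT_LOCKED = "account_locked"


# Each entry pairs what happened with something the user can do about it.
USER_MESSAGES = {
    FailureReason.MFA_CODE_INCORRECT: (
        "The MFA code was not accepted.",
        "Wait for a new code on your token and try again.",
    ),
    FailureReason.ANTIVIRUS_OUT_OF_DATE: (
        "Your antivirus definitions are out of date.",
        "Update the antivirus on this laptop, then sign in again.",
    ),
    FailureReason.ACCOUNT_LOCKED: (
        "Your account is locked.",
        "Contact the service desk to unlock it.",
    ),
}

# Used when the reason is unknown, so the user is never left with nothing.
FALLBACK_MESSAGE = (
    "Sign-in could not be completed.",
    "Contact the service desk and give the time of the attempt.",
)


def explain_failure(reason):
    """Return a plain-language explanation plus a next step for the user."""
    what_happened, next_step = USER_MESSAGES.get(reason, FALLBACK_MESSAGE)
    return f"{what_happened} {next_step}"


if __name__ == "__main__":
    print(explain_failure(FailureReason.ANTIVIRUS_OUT_OF_DATE))
```

Keeping the messages in one table, separate from the authentication logic, also makes it easier to revise the wording after pilot tests with operators.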

As the examples above show, user-centric cybersecurity starts from the risks, which determine the level of security controls to be implemented, and then applies user-centric principles to those controls. This will, in my opinion, result in better-implemented cybersecurity initiatives that reduce the impact on productivity while maintaining the required security, and keep your users more effective and satisfied.

Want to go further? Discover related Technical Brochures on eCIGRE

► TB 796: Cybersecurity: Future threats and impact on electric power utility organizations and operations

► TB 840: Electric Power Utilities' Cybersecurity for Contingency Operations

► TB 762: Remote service security requirement objectives

► TB 698: Framework for EPU operators to manage the response to a cyber-initiated threat to their critical infrastructure

References

[1] National Institute of Standards and Technology, “NIST SP 800-63B,” 2017.

[2] National Cyber Security Centre (UK), “The problems with forcing regular password expiry,” 2016.

[3] Australian Signals Directorate, “Information Security Manual,” 2024.

[4] K. R, “The logic behind three random words,” NCSC UK, 2021.

[5] National Institute of Standards and Technology, “NIST SP 800-63B-4 Digital Identity Guidelines,” 2025.

[6] Australian Signals Directorate, “Guidelines for networking,” 2025.

[7] National Security Agency, “Network Infrastructure Security Guide,” 2023.

[8] J. W. Beck, A. A. Scholer and A. M. Schmidt, “Workload, Risks, and Goal Framing as Antecedents of Shortcut Behaviors,” Journal of Business and Psychology, 2016.

[9] H. Dreyfuss, “Designing for People,” 1955.

[10] ncsc.gov.uk, 2022 [Online].

Thumbnail credit: Nika on Lummi