The AI debate needs balance - The power systems industry should help

If there’s one thing power system professionals know, it’s balance. Balance is the essence of all that we do: balancing supply and demand, and creating innovative solutions that balance the three aspects of the energy trilemma: affordability, sustainability, and reliability.

This experience with balance is sorely needed in the often-polarizing Artificial Intelligence (AI) debate, a debate in which energy industry professionals have so far stayed mostly on the sidelines, letting technocrats and government officials take the reins. Yet power systems are literally at the center of this debate: data centers, where AI models are trained, put additional load on already-strained power grids, and grid owners and operators have to find ways to power this growing demand. Grid operators are also on the other side of the equation, as users of AI.

Using AI

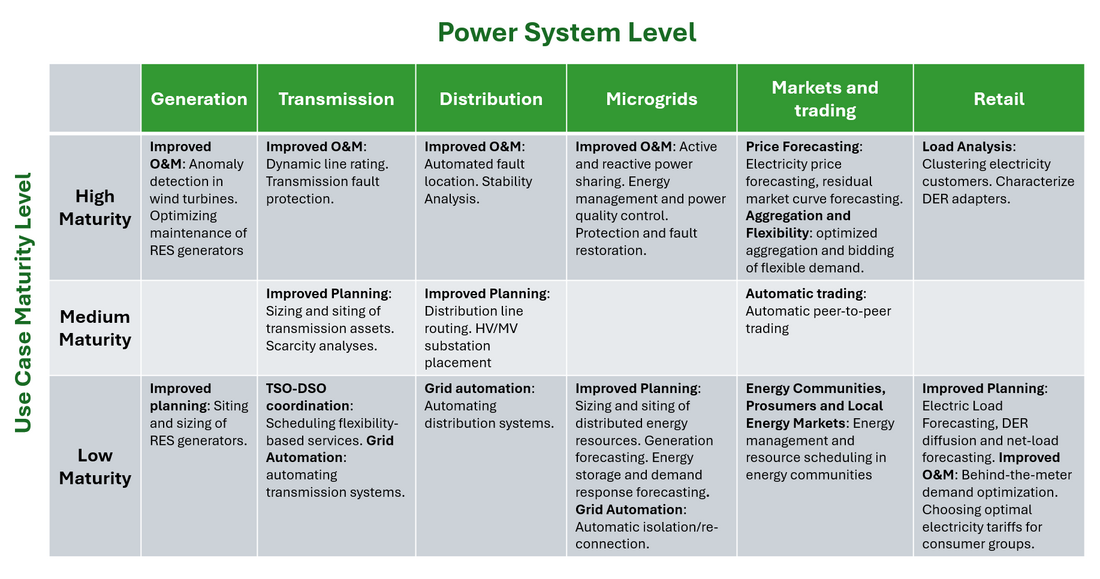

Companies up and down the power systems value chain use traditional AI methods to improve their forecasting models, plan their operations, and improve resiliency. The following table shows the maturity of AI use cases across all areas of power systems, approximated by mentions of these use cases in literature.

Source: Reviewing 40 years of artificial intelligence applied to power systems – A taxonomic perspective

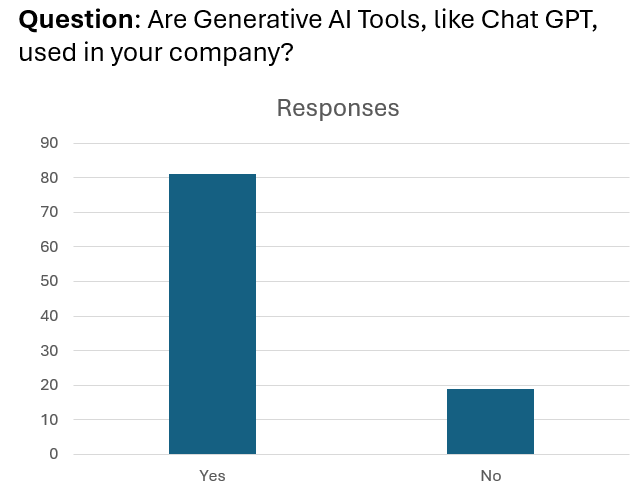

Power systems professionals are also embracing new AI techniques like Generative AI, which is primarily used for generating text and images. At the D2 General Discussion Meeting at last year’s CIGRE Paris Session, a live poll asked over 200 attendees if they used Generative AI tools like ChatGPT at work. Over 80% said they did. This suggests that a large majority of power systems professionals rely on AI even for corporate tasks like drafting contracts, taking meeting notes, and summarizing emails.

Source: CIGRE Paris Session 2024 D2 GDM live poll

A recent taxonomic review of papers on AI in Power Systems shows an exponential increase in mentions of AI for power systems, rising from 5,000 papers in 2005 to 10,000 in 2010 and over 25,000 in 2023.

As power systems become larger and more complex with the addition of ever more electrified devices and the move toward prosumer-based energy systems, we can expect the use of AI in power systems to continue on this strong growth trajectory.

Powering the AI revolution

AI use is not only increasing in our industry. Across all industries, from healthcare to finance, AI applications are exploding. This boom in AI is made possible by us power systems professionals. Worldwide, data centers where AI models are trained already draw 60 GW of power. This demand is expected to roughly triple, to between 171 and 219 GW, in the next 5 years, according to an analysis by McKinsey. That is an increase greater than the current peak load of Korea (90 GW).

From this point of view, AI is like any other additional load on power grids, which are already strained. Grid interconnection queues are getting longer, with all types of load and generation projects stalled. This increases costs for all consumers and sets back carbon neutrality goals. Coal and gas-fired power plants are having their lives extended, in part to power this AI boom.

It will be increasingly difficult to convince homeowners and consumers to make lifestyle changes like switching to electric cars or heating their homes with electric heat pumps when the energy used to power these changes comes from fossil sources, and when they see massive new loads like data centers moving onto the grid and “canceling out” their sustainability efforts. We have one grid, and even with power-purchasing agreements like those piloted by Google, more load of any kind adds to the energy that must be covered by increasing supply.
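The data center demand figures quoted in this section can be checked with a few lines of arithmetic. The sketch below uses only the numbers given in the text (60 GW today, 171 to 219 GW projected, 90 GW for Korea's peak load); the variable and function names are illustrative and not taken from the McKinsey analysis.

```python
# Figures quoted in the text, all in GW. Names are illustrative assumptions.
current_gw = 60
projected_low_gw, projected_high_gw = 171, 219
korea_peak_gw = 90


def growth_multiple(projected: float, current: float) -> float:
    """Return how many times larger the projected demand is than today's."""
    return projected / current


low_multiple = growth_multiple(projected_low_gw, current_gw)
high_multiple = growth_multiple(projected_high_gw, current_gw)

# Additional demand on top of today's level.
low_increase_gw = projected_low_gw - current_gw
high_increase_gw = projected_high_gw - current_gw

print(f"Growth multiple: {low_multiple:.2f}x to {high_multiple:.2f}x")
print(f"Added demand: {low_increase_gw} to {high_increase_gw} GW")
print(f"Exceeds Korea's peak load: {low_increase_gw > korea_peak_gw}")
```

Even the low end of the projected range adds more demand than Korea's current peak load.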

Our Role as Power System Professionals

To meet our global climate goals under the Paris Agreement, any additional demand as large as that of AI-driven data center growth should be scrutinized and compared against other vital loads, like electrifying industry and expanding electric-powered transportation. Here, frameworks like cost-benefit analysis with environmental impact assessment can be of service. Complying with new AI standards, such as ISO/IEC 42001, is also recommended for continually assessing the risks and benefits of AI use cases.

For data center loads that are deemed necessary to bring onto the grid when compared against other important interconnections, grid operators should push hyperscalers (massive tech companies leading the AI revolution, like Google and Microsoft) and other builders of data centers to ensure not only that the power requirements of their new data centers are covered with new sustainable generation, but also that at least double that capacity is installed to power other loads and continue the energy transition. We should also work with hyperscalers and other technology companies on solutions for making AI model training and other data center workloads more flexible.

In our own work building and running electric power systems, we should push to use AI responsibly and question the use cases for AI in our companies.

We should focus on use cases that bring value to energy systems and our end customers while retaining the dignity of work. This means piloting new means of AI development, like participatory design, and questioning the necessity of using extremely energy-inefficient AI models like Large Language Models (LLMs) for tasks of questionable importance and value, like summarizing emails or drafting texts (this article was proudly written without the use of AI).

Energy efficiency principles in building design and energy management tell us to first consider whether the energy use is necessary at all, or whether it can be avoided, as with passive design. We should apply these principles to AI use cases as well. AI is not a panacea. We should target its use at application areas that have a high return on investment when accounting for full model training and inference costs and externalities like extended fossil-generation life spans.

Power systems professionals should learn more about how AI works so that they can actively contribute to debates about its proper use. Two CIGRE Working Groups in Study Committee D2, D2.52 and D2.59, are working on Technical Brochures on this topic. WG D2.52 is writing a Technical Brochure introducing power systems professionals to AI and its use in our industry, and WG D2.59’s Technical Brochure focuses on novel AI methods, like generative models. New collaborative working groups across study committees are also working on Technical Brochures that explore the application of AI to specific areas, such as the Joint Working Group A3/D2 on Applications of Digital Twins in Switchgear. More CIGRE Joint Working Groups across Study Committees following this example will help focus AI development on the use cases that most benefit power system operations.

Conclusion

AI, like all tools and technologies, is neither all good nor all bad. We power systems professionals can help tip the scales in the positive direction by using AI for high-value tasks in our own companies, and by pushing for a responsible expansion of AI that has positive effects on the communities we serve. It’s a hard balancing act. But we succeed at hard balancing acts every day, with the proof in lights kept on and industries powered. We can and should take a more active role in balancing the AI debate.

About the Author

Rachel Berryman is a Senior Innovation Manager and AI Lab Lead based in Berlin, Germany. A data scientist by training, she has 8 years of experience in AI for power systems. She holds a master’s degree in Sustainable Development and a master’s in Computer Science, for which her thesis presented a framework for ethical AI, applying stoic ethics to the AI model training process. She is an Advisory Group Secretary for SC D2 and active in the Working Group SC D2.52.

Banner & thumbnail credit: MengWen Guo on iStock